Pegasus Workflow Management System (WMS) simplifies running complex AI and machine learning (ML) workflows, especially in research, high-throughput (HTC), and high-performance computing (HPC) environments.

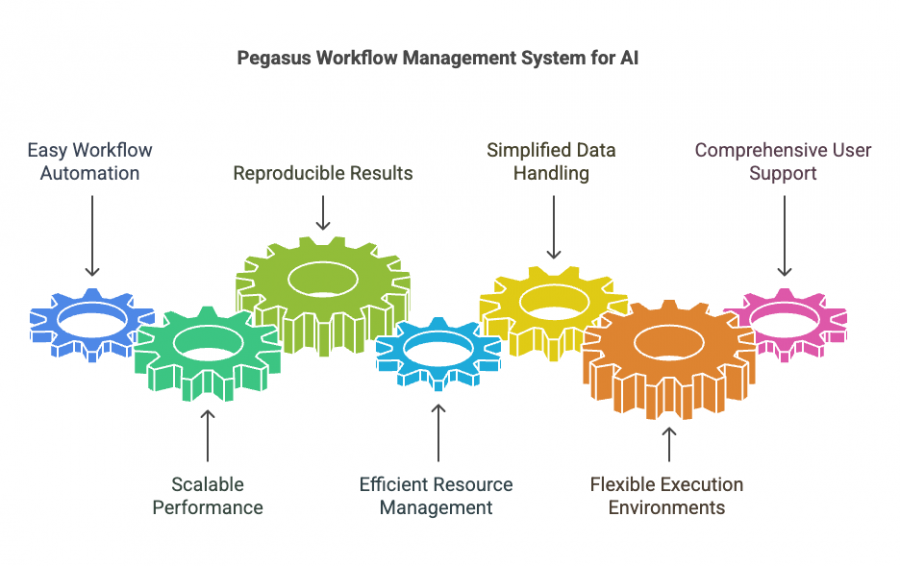

Why Use Pegasus for Your AI Workflows?

- Easy Workflow Automation

Pegasus automatically manages every step of your AI workflow, from data preparation and model training to hyperparameter tuning and evaluation. It ensures each step runs in the correct sequence without manual intervention.

- Scalable Performance

Pegasus is built to handle resource-intensive AI tasks. It efficiently distributes your workloads across powerful computing resources, including clusters, cloud platforms, or hybrid setups.

- Reproducible Results

Pegasus captures detailed logs, tracks data history, and clearly describes your workflows. This ensures your AI experiments can be consistently reproduced, which is essential for validation and collaboration.

- Efficient Resource Management

Easily integrate Pegasus with popular resource schedulers such as HTCondor, SLURM, or Kubernetes. This helps manage your computing resources effectively, making your AI tasks quicker and more efficient.

- Simplified Data Handling

AI tasks often deal with large datasets. Pegasus takes care of data transfer, storage, and cleanup across multiple systems, allowing you to focus on your research instead of data logistics.

- Flexible Execution Environments

Whether you’re running workflows on your laptop, a dedicated HPC cluster, or cloud environments, Pegasus adapts easily to your project’s specific needs.
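To make the idea of running each step "in the correct sequence" concrete, here is a minimal sketch in plain Python. It does not use the Pegasus API. It only illustrates the general technique of deriving an execution order from the files each step reads and writes. The job names, file names, and the helper functions `infer_dependencies` and `execution_order` are hypothetical and chosen purely for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical jobs, deliberately listed out of order:
# job name -> (input files, output files)
JOBS = {
    "evaluate": ({"model.pt", "test.csv"}, {"metrics.json"}),
    "train": ({"train.csv"}, {"model.pt"}),
    "prepare": ({"raw.csv"}, {"train.csv", "test.csv"}),
}


def infer_dependencies(jobs):
    """Map each job to the set of jobs that produce one of its inputs."""
    # Record which job writes each file.
    producer = {
        out: name for name, (_, outputs) in jobs.items() for out in outputs
    }
    # A job depends on the producer of every input that some job creates.
    # Inputs with no producer (e.g. raw.csv) are treated as pre-existing data.
    return {
        name: {producer[f] for f in inputs if f in producer}
        for name, (inputs, _) in jobs.items()
    }


def execution_order(jobs):
    """Return one valid order in which the jobs can run."""
    return list(TopologicalSorter(infer_dependencies(jobs)).static_order())


print(execution_order(JOBS))
```

In this sketch the order follows from the data flow alone: `prepare` must run before `train`, and both must finish before `evaluate`. A workflow system applies the same principle at scale, and additionally handles scheduling, data movement, and retries, which this sketch leaves out.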

Comprehensive User Support

Pegasus provides extensive support to help you get started and troubleshoot your workflows:

- Weekly Office Hours: Join our regular sessions to ask questions and receive personalized guidance.

- Slack Channel: Engage with the Pegasus community and support team directly for quick assistance.

- Online Tutorials: Access detailed tutorials and guides designed to help you learn and implement workflows. You will find these self-guided tutorials in your $HOME directory under ACCESS-Pegasus-Examples after logging in and starting a Jupyter notebook on ACCESS Pegasus.

Community Contributions

Pegasus encourages users to contribute workflows, improvements, and insights back to the community. Join our vibrant user community to share your experiences, collaborate, and help shape the future of Pegasus.

Integration Capabilities

Pegasus seamlessly integrates with popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn, as well as container technologies such as Docker and Singularity, making it easy to incorporate into your existing workflows and software stacks.

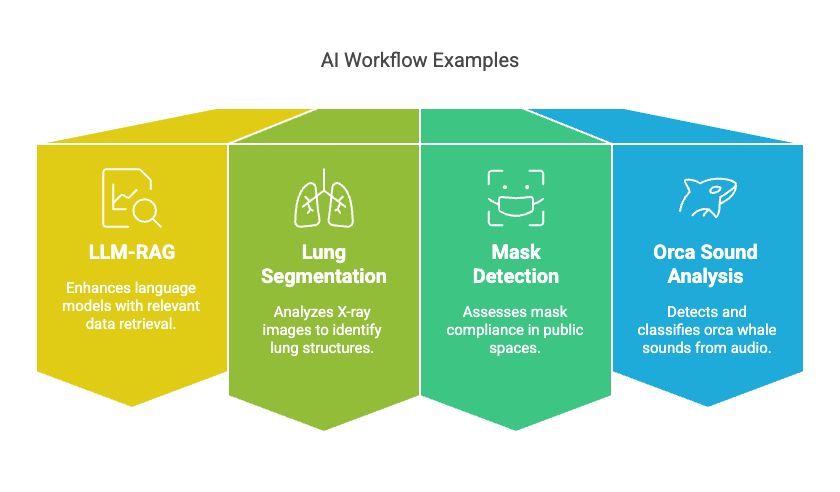

Real-world AI Workflow Examples

Our AI workflow examples are available on ACCESS Pegasus as Jupyter notebooks. All you need is an ACCESS account; no allocation is required.

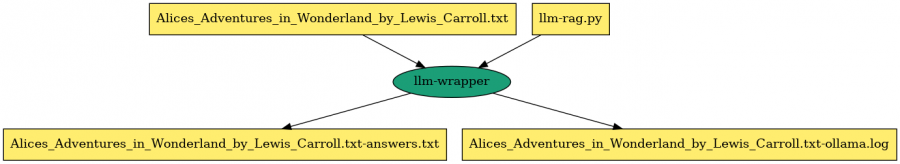

LLM-RAG (Retrieval Augmented Generation): Enhances large language models by retrieving relevant information from databases to improve accuracy and context-awareness. [ACCESS Pegasus Example]

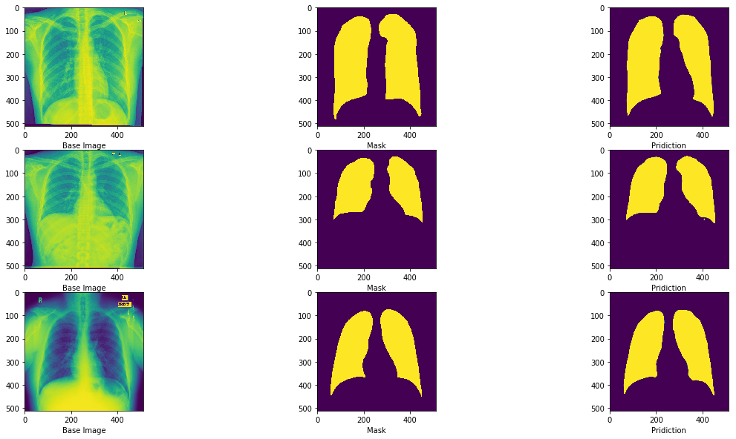

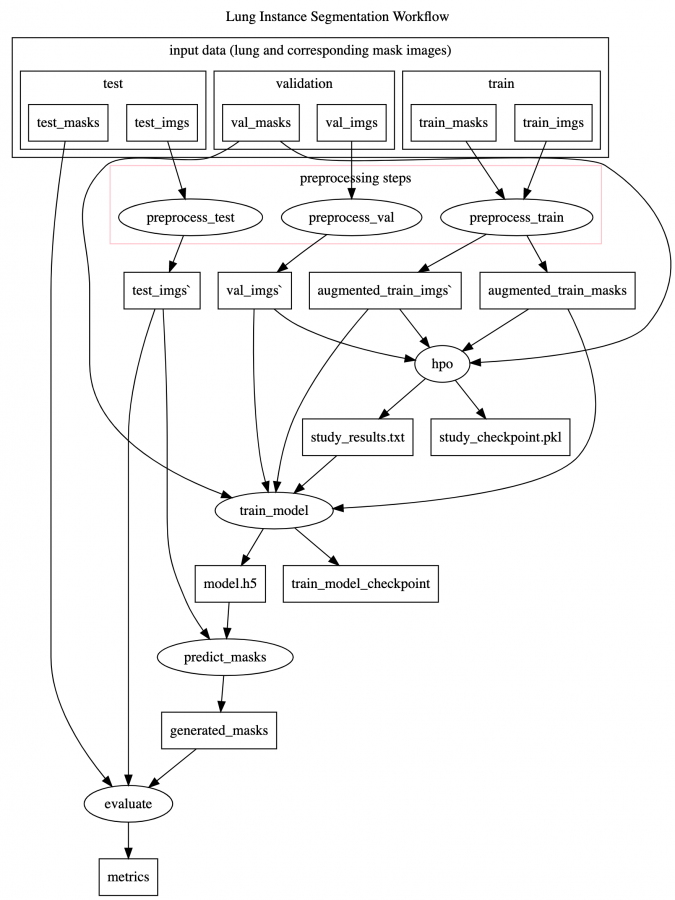

Lung Segmentation: Utilizes supervised learning to analyze X-ray images and automatically identify lung structures using advanced neural network models. [GitHub] [ACCESS Pegasus Example]

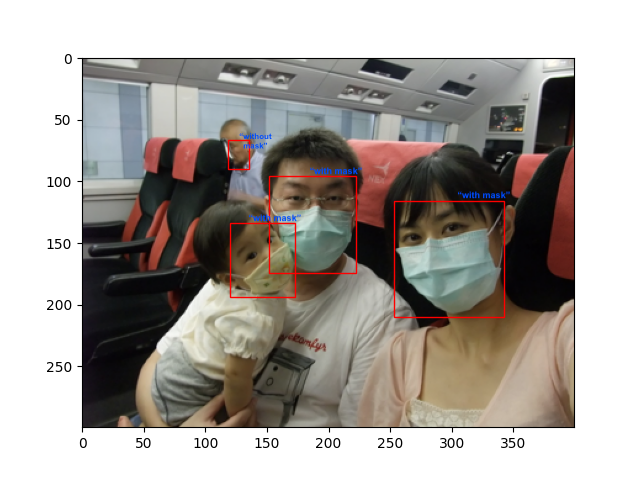

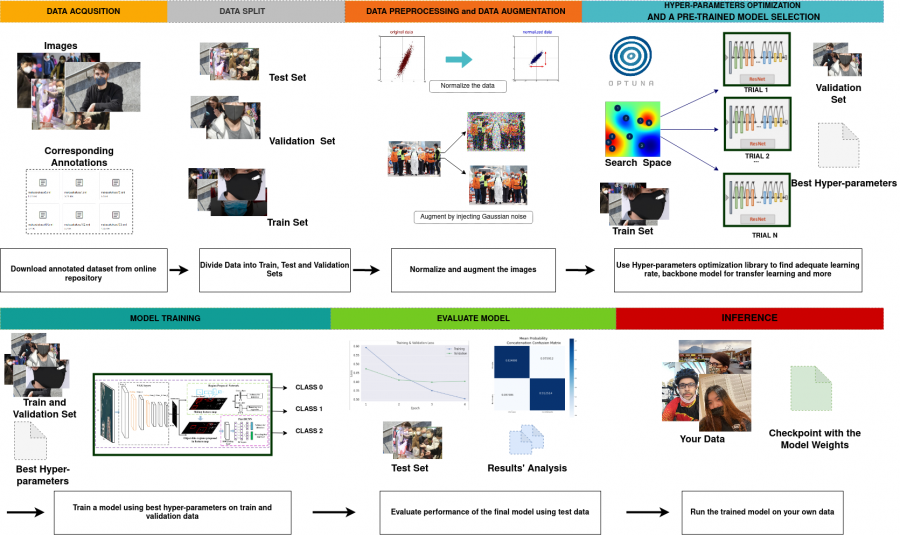

Mask Detection: Employs deep learning to assess mask-wearing compliance in public spaces, supporting health and safety initiatives. [GitHub] [ACCESS Pegasus Example]

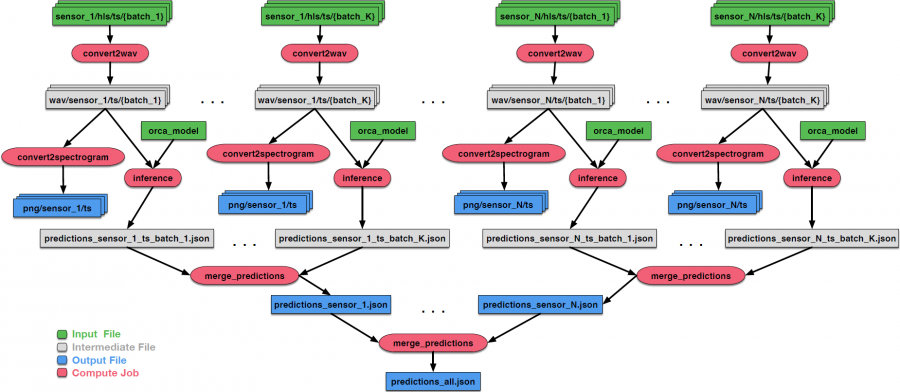

Orca Sound: Analyzes underwater audio data to detect and classify orca whale sounds using trained machine learning models. [GitHub] [ACCESS Pegasus Example]

Explore these examples and start automating your AI workflows with Pegasus today!