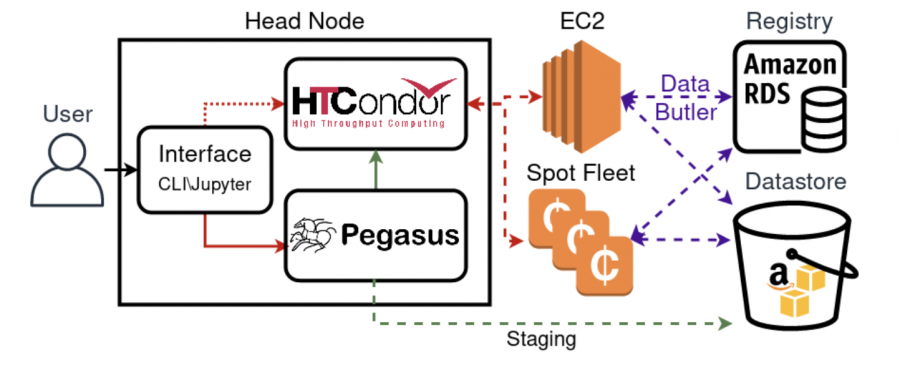

The Data Intensive Research in Astrophysics and Cosmology (DIRAC) Institute, the University of Washington (UW) Department of Astronomy, the Legacy Survey of Space and Time (LSST) and Amazon Web Services (AWS) joined forces to develop a proof of concept (PoC) that uses cloud resources to process astronomical images at scale. The goal of the AWS PoC was to implement the functionality required to run the Vera C. Rubin Observatory LSST Science Pipelines on AWS. The PoC successfully used HTCondor Annex and Pegasus to create and execute workflows on heterogeneous, dynamically resizable compute clusters, in order to determine the feasibility and performance of such a system and to provide initial cost estimates.

To scale, we first need a way to procure, monitor and manage EC2 compute instances. HTCondor Annex, an extension to HTCondor, does exactly that. Procured instances are automatically added to the HTCondor compute pool and are released either when a set lease time has elapsed or when they have been idle for longer than a set timeout.
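The release policy described above can be modelled in a few lines. This is a minimal sketch under stated assumptions, not HTCondor Annex's implementation: `AnnexInstance`, `should_release` and `reap` are hypothetical names, times are plain numbers in hours, and an instance is released as soon as either its lease has run out or it has been idle for at least the timeout.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AnnexInstance:
    """Hypothetical record of one procured instance; all times are in hours."""
    name: str
    started_at: float    # when the instance joined the compute pool
    last_busy_at: float  # last time the instance was running a job


def should_release(inst: AnnexInstance, now: float,
                   lease_hours: float, idle_hours: float) -> bool:
    """True if the lease has run out or the instance has idled too long."""
    lease_expired = now - inst.started_at >= lease_hours
    idle_too_long = now - inst.last_busy_at >= idle_hours
    return lease_expired or idle_too_long


def reap(pool: list[AnnexInstance], now: float, lease_hours: float,
         idle_hours: float) -> tuple[list[AnnexInstance], list[str]]:
    """Split the pool into instances to keep and names of instances to release."""
    keep: list[AnnexInstance] = []
    released: list[str] = []
    for inst in pool:
        if should_release(inst, now, lease_hours, idle_hours):
            released.append(inst.name)
        else:
            keep.append(inst)
    return keep, released


if __name__ == "__main__":
    pool = [
        AnnexInstance("a", started_at=0.0, last_busy_at=9.5),  # lease expired
        AnnexInstance("b", started_at=4.0, last_busy_at=8.0),  # idle too long
        AnnexInstance("c", started_at=5.0, last_busy_at=9.9),  # still useful
    ]
    keep, released = reap(pool, now=10.0, lease_hours=8.0, idle_hours=0.5)
    print(released)  # ['a', 'b']
```

Combining a hard lease with an idle timeout bounds cost in two ways: the lease caps how long any instance can run, while the idle timeout returns capacity early once the workflow runs out of jobs for it.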

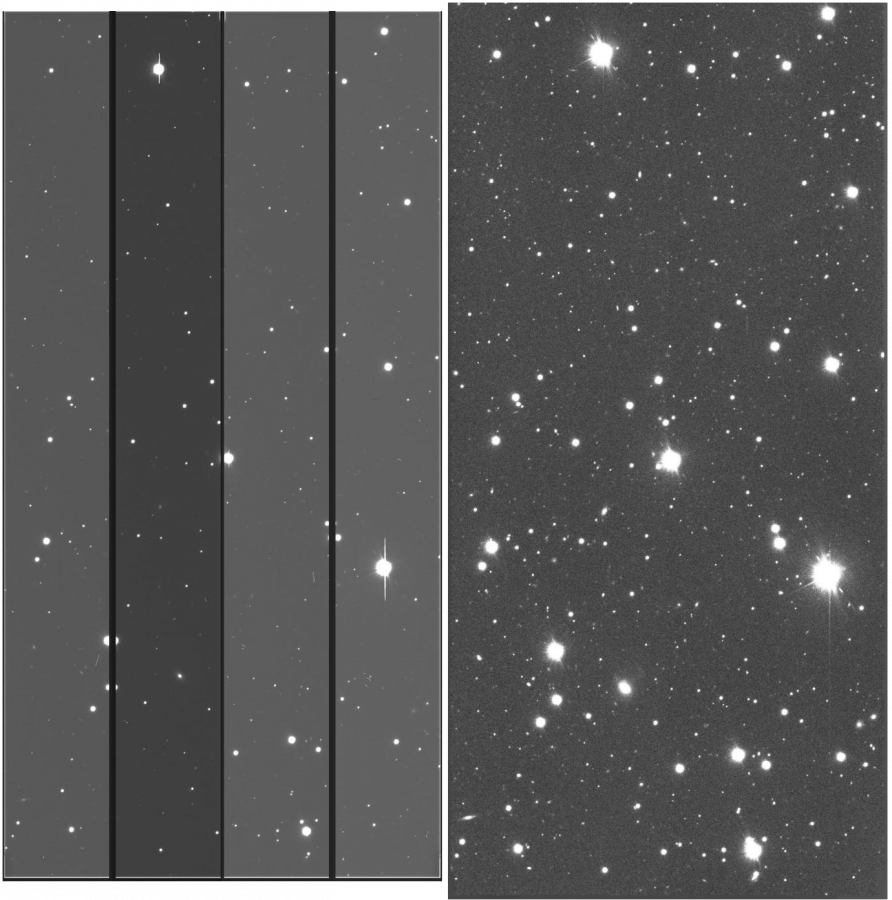

We convert the Quantum Graph to an abstract workflow using the Pegasus API. In the Pegasus planning stage, the abstract workflow is converted to an executable workflow, which is then executed by HTCondor’s DAGMan.

We tested the system by running shortened Data Release Production (DRP) pipelines, which produce calibrated images and source catalogs, on Hyper Suprime-Cam (HSC) data. The DRP workflow is the pipeline Rubin will run on its data in production. The test dataset corresponds to approximately 11% of the number of nightly observations and to ~2% of Rubin's nightly data volume. In absolute terms, the dataset consists of ~0.2 TB of input data, from which ~3 TB of output data are produced. For technical reasons, the tests include an initialization Task that usually takes 25-30 minutes to execute; in principle, it would not need to be executed for subsequent runs that add to the same dataset collection.
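The conversion from Quantum Graph to abstract workflow can be illustrated with a small, Pegasus-free sketch. It assumes a simplified model in which each quantum is one Task execution with named input and output datasets, and it adds a parent-to-child edge wherever one quantum produces a dataset that another consumes. `Quantum`, `AbstractWorkflow`, `to_abstract_workflow`, `execution_order` and all task and dataset names are hypothetical; this is neither the LSST middleware nor the Pegasus API, and a real Pegasus abstract workflow also carries information such as transformations and file catalogs that is omitted here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class Quantum:
    """Hypothetical stand-in for one Quantum Graph node: a single Task execution."""
    label: str
    task: str
    inputs: frozenset[str]
    outputs: frozenset[str]


@dataclass
class AbstractWorkflow:
    """Hypothetical abstract workflow: jobs plus, for each job, its parent jobs."""
    jobs: dict[str, Quantum] = field(default_factory=dict)
    parents: dict[str, set[str]] = field(default_factory=dict)


def to_abstract_workflow(quanta: list[Quantum]) -> AbstractWorkflow:
    """One job per quantum; a job's parents are the producers of its inputs."""
    producer: dict[str, str] = {}
    for q in quanta:
        for dataset in q.outputs:
            producer[dataset] = q.label
    wf = AbstractWorkflow()
    for q in quanta:
        wf.jobs[q.label] = q
        # Inputs with no producer already exist (e.g. raw images) and add no edge.
        wf.parents[q.label] = {producer[d] for d in q.inputs if d in producer}
    return wf


def execution_order(wf: AbstractWorkflow) -> list[str]:
    """One valid serial order; a DAG executor may run independent jobs concurrently."""
    return list(TopologicalSorter(wf.parents).static_order())


if __name__ == "__main__":
    quanta = [
        Quantum("isr_1", "isr", frozenset({"raw_1"}), frozenset({"post_isr_1"})),
        Quantum("calib_1", "calibrate", frozenset({"post_isr_1"}), frozenset({"calexp_1"})),
        Quantum("isr_2", "isr", frozenset({"raw_2"}), frozenset({"post_isr_2"})),
        Quantum("calib_2", "calibrate", frozenset({"post_isr_2"}), frozenset({"calexp_2"})),
        Quantum("coadd", "coadd", frozenset({"calexp_1", "calexp_2"}), frozenset({"coadd"})),
    ]
    wf = to_abstract_workflow(quanta)
    print(sorted(wf.parents["coadd"]))  # ['calib_1', 'calib_2']
    print(execution_order(wf)[-1])      # coadd
```

Deriving edges from data flow, rather than listing them by hand, keeps the workflow consistent with the graph it came from. The topological order is only one valid serialization; an executor such as DAGMan is free to run independent jobs, here the two per-image chains, in parallel.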

Scientist: Dino Bektešević, University of Washington, LSST/Rubin Observatory project