8. Submit Directory Details

This chapter describes the submit directory content after Pegasus has planned a workflow. Pegasus takes in an Abstract Workflow and generates an executable workflow (DAG) in the submit directory.

This document also describes the various Replica Selection Strategies in Pegasus.

8.1. Layout

Each executable workflow is associated with a submit directory, and includes the following:

<wflabel-wfindex>.dag

This is the Condor DAGMan dag file corresponding to the executable workflow generated by Pegasus. The dag file describes the edges in the DAG and information about the jobs in the DAG. A Pegasus-generated .dag file usually contains the following information for each job:

The job submit file for each job in the DAG.

The post script that is to be invoked when a job completes. This is usually located at $PEGASUS_HOME/bin/exitpost; it parses the kickstart record in the job’s .out file and determines the exitcode.

JOB RETRY - the number of times the job is to be retried in case of failure. In Pegasus, the job postscript exits with a nonzero exitcode if it determines a failure occurred.

<wflabel-wfindex>.dag.dagman.out

When a DAG (.dag file) is executed by Condor DAGMan, DAGMan writes its output to the <wflabel-wfindex>.dag.dagman.out file. This file records the progress of the workflow and can be used to determine its status. Most Pegasus tools mine the dagman.out or jobstate.log file to determine the progress of workflows.

<wflabel-wfindex>.static.bp

This file contains netlogger events that link jobs in the DAG with the jobs in the Abstract Workflow. pegasus-monitord parses this file when a workflow starts and loads its contents into the stampede backend.

<wflabel-wfindex>.stampede.db

This is the stampede backend (a sqlite database) that pegasus-monitord populates with the runtime provenance information from the workflow dagman.out file and the job .out and .err files.

<wflabel-wfindex>.replicas.db

This is the default output replica catalog (a sqlite database) to which the registration jobs populate output file locations and associated metadata.

<wflabel-wfindex>.replica.store

This is a file-based replica catalog that lists only the file locations mentioned in the Replica Catalog section of the Abstract Workflow. It is written out by the planner at mapping time and is used to pass locations of files mentioned in the parent workflow to a sub workflow in hierarchical workflows.

<wflabel-wfindex>.cache

This is a cache file generated by the planner that records where all the files in the workflow will be placed on the staging site as the workflow executes. In hierarchical workflows, it is used to pass the locations of files in the parent workflow to the planner when it is invoked on the sub workflows.

<wflabel-wfindex>.cache.meta

This is a file populated by pegasus-exitcode to record the checksum information gleaned from parsing the kickstart output present in the job.out.* files. In hierarchical workflows, it is used to pass checksum information for files in the parent workflow to the planner when it is invoked on the sub workflows.

<wflabel-wfindex>.metadata

This is a workflow-level, JSON-formatted metadata file that is written out by the planner at mapping time. It lists all metadata for the workflow, jobs, and files specified in the Abstract Workflow.

<wflabel-wfindex>.metrics

This is a JSON-formatted file generated by the planner that includes planning metrics and workflow-level metrics, such as the different types of jobs and files.

<wflabel-wfindex>.notify

This file contains all the notifications that need to be set for the workflow and the jobs in the executable workflow. The format of the notify file is described elsewhere in the Pegasus documentation.

<wflabel-wfindex>.dot

Pegasus creates a dot file for the executable workflow in addition to the .dag file. This can be used to visualize the executable workflow using the dot program.

<wflabel-wfindex>-concrete.png

Pegasus creates a png file visualizing the output executable workflow, using the program pegasus-graphviz. The png file is created as long as the dot program is installed and the number of jobs in the executable workflow is less than or equal to 150. This png file is consistent with the dot file created for the workflow.

<wflabel-wfindex>-abstract.png

Pegasus creates a png file visualizing the input abstract workflow, using the program pegasus-graphviz. The image only lists the jobs making up the workflow and does not include files. The png file is created as long as the dot program is installed and the number of jobs in the abstract workflow is less than or equal to 100.

<wflabel-wfindex>-abstract-files.png

Pegasus creates a png file visualizing the input abstract workflow, using the program pegasus-graphviz. The image includes both the jobs and the files making up the workflow. The png file is created as long as the dot program is installed, the number of jobs in the abstract workflow is less than or equal to 100, and the number of files referred to in the workflow is less than or equal to 300.

jobstate.log

The jobstate.log file is written out by the pegasus-monitord daemon that is launched when a workflow is submitted for execution by pegasus-run. The pegasus-monitord daemon parses the dagman.out file and writes out the jobstate.log that is easier to parse. The jobstate.log captures the various states through which a job goes during the workflow. There are other monitoring related files that are explained in the monitoring chapter.

braindump.yml

Contains information about the Pegasus version, dax file, dag file, and dax label.

<job>.sub

Each job in the executable workflow is associated with its own submit file. The submit file tells Condor how to execute the job.

<job>.out.00n

The stdout of the executable referred to in the job submit file. In Pegasus, most jobs are launched via kickstart. Hence, this file contains the kickstart provenance record that captures runtime provenance on the remote node where the job was executed. n varies from 1 to N, where N is the JOB RETRY value in the .dag file. The exitpost executable is invoked on the <job>.out file and moves <job>.out to <job>.out.00n so that the job’s .out files are preserved across retries.

<job>.err.00n

The stderr of the executable referred to in the job submit file. In Pegasus, most jobs are launched via kickstart. Hence, this file contains the stderr of kickstart. It is usually empty unless there is an error in kickstart, e.g. kickstart segfaults or the kickstart location specified in the submit file is incorrect. The exitpost executable is invoked on the <job>.out file and moves <job>.err to <job>.err.00n so that the job’s .err files are preserved across retries.

<job>.meta

This is a file created at runtime when pegasus-exitcode parses the kickstart output in the job.out file. This file records metadata and checksum information for output files created by the job and recorded by pegasus-kickstart.
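Because each attempt's stdout and stderr are rotated to <job>.out.00n and <job>.err.00n, the most recent attempt is the file with the highest suffix. The following is a minimal Python sketch of that lookup, assuming three-digit numeric suffixes as described above; the function name latest_attempt is hypothetical and is not part of any Pegasus API.

```python
import re
from pathlib import Path

# Matches the rotated suffix described above, e.g. "preprocess_ID000001.out.002".
_ROTATED = re.compile(r"\.(out|err)\.(\d{3})$")


def latest_attempt(submit_dir, job, stream="out"):
    """Return the highest-numbered <job>.<stream>.00n file, or None if absent."""
    best = None
    best_n = -1
    for path in Path(submit_dir).glob(f"{job}.{stream}.*"):
        match = _ROTATED.search(path.name)
        if match and match.group(1) == stream:
            n = int(match.group(2))
            if n > best_n:
                best, best_n = path, n
    return best
```

Calling latest_attempt(submit_dir, "preprocess_ID000001") would return the path of the last rotated kickstart record for that job, and passing stream="err" does the same for stderr.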

8.2. HTCondor DAGMan File

The Condor DAGMan file (.dag) is the input to Condor DAGMan (the workflow executor used by Pegasus).

A Pegasus-generated .dag file usually contains the following information for each job:

The job submit file for each job in the DAG.

The post script that is to be invoked when a job completes. This is usually found in $PEGASUS_HOME/bin/exitpost; it parses the kickstart record in the job’s .out file and determines the exitcode.

JOB RETRY - the number of times the job is to be retried in case of failure. In Pegasus, the job postscript exits with a nonzero exitcode if it determines a failure occurred.

The pre script to be invoked before running a job. This is usually used for the dax jobs in the DAX, where the pre script is the pegasus-plan invocation for the subdax.

The last section of the DAG file lists the relations between the jobs, which define the underlying DAG structure.

8.3. Sample Condor DAG File

#####################################################################

# PEGASUS WMS GENERATED DAG FILE

# DAG blackdiamond

# Index = 0, Count = 1

######################################################################

JOB create_dir_blackdiamond_0_isi_viz create_dir_blackdiamond_0_isi_viz.sub

SCRIPT POST create_dir_blackdiamond_0_isi_viz /pegasus/bin/pegasus-exitcode \

/submit-dir/create_dir_blackdiamond_0_isi_viz.out

RETRY create_dir_blackdiamond_0_isi_viz 3

JOB create_dir_blackdiamond_0_local create_dir_blackdiamond_0_local.sub

SCRIPT POST create_dir_blackdiamond_0_local /pegasus/bin/pegasus-exitcode \

/submit-dir/create_dir_blackdiamond_0_local.out

JOB pegasus_concat_blackdiamond_0 pegasus_concat_blackdiamond_0.sub

JOB stage_in_local_isi_viz_0 stage_in_local_isi_viz_0.sub

SCRIPT POST stage_in_local_isi_viz_0 /pegasus/bin/pegasus-exitcode \

/submit-dir/stage_in_local_isi_viz_0.out

JOB chmod_preprocess_ID000001_0 chmod_preprocess_ID000001_0.sub

SCRIPT POST chmod_preprocess_ID000001_0 /pegasus/bin/pegasus-exitcode \

/submit-dir/chmod_preprocess_ID000001_0.out

JOB preprocess_ID000001 preprocess_ID000001.sub

SCRIPT POST preprocess_ID000001 /pegasus/bin/pegasus-exitcode \

/submit-dir/preprocess_ID000001.out

JOB subdax_black_ID000002 subdax_black_ID000002.sub

SCRIPT PRE subdax_black_ID000002 /pegasus/bin/pegasus-plan \

-Dpegasus.user.properties=/submit-dir/./dag_1/test_ID000002/pegasus.3862379342822189446.properties\

-Dpegasus.log.*=/submit-dir/subdax_black_ID000002.pre.log \

-Dpegasus.dir.exec=app_domain/app -Dpegasus.dir.storage=duncan -Xmx1024 -Xms512\

--dir /pegasus-features/dax-3.2/dags \

--relative-dir user/pegasus/blackdiamond/run0005/user/pegasus/blackdiamond/run0005/./dag_1 \

--relative-submit-dir user/pegasus/blackdiamond/run0005/./dag_1/test_ID000002\

--basename black --sites dax_site \

--output local --force --nocleanup \

--verbose --verbose --verbose --verbose --verbose --verbose --verbose \

--verbose --monitor --deferred --group pegasus --rescue 0 \

--dax /submit-dir/./dag_1/test_ID000002/dax/blackdiamond_dax.xml

JOB stage_out_local_isi_viz_0_0 stage_out_local_isi_viz_0_0.sub

SCRIPT POST stage_out_local_isi_viz_0_0 /pegasus/bin/pegasus-exitcode /submit-dir/stage_out_local_isi_viz_0_0.out

SUBDAG EXTERNAL subdag_black_ID000003 /Users/user/Pegasus/work/dax-3.2/black.dag DIR /duncan/test

JOB clean_up_stage_out_local_isi_viz_0_0 clean_up_stage_out_local_isi_viz_0_0.sub

SCRIPT POST clean_up_stage_out_local_isi_viz_0_0 /lfs1/devel/Pegasus/pegasus/bin/pegasus-exitcode \

/submit-dir/clean_up_stage_out_local_isi_viz_0_0.out

JOB clean_up_preprocess_ID000001 clean_up_preprocess_ID000001.sub

SCRIPT POST clean_up_preprocess_ID000001 /lfs1/devel/Pegasus/pegasus/bin/pegasus-exitcode \

/submit-dir/clean_up_preprocess_ID000001.out

PARENT create_dir_blackdiamond_0_isi_viz CHILD pegasus_concat_blackdiamond_0

PARENT create_dir_blackdiamond_0_local CHILD pegasus_concat_blackdiamond_0

PARENT stage_out_local_isi_viz_0_0 CHILD clean_up_stage_out_local_isi_viz_0_0

PARENT stage_out_local_isi_viz_0_0 CHILD clean_up_preprocess_ID000001

PARENT preprocess_ID000001 CHILD subdax_black_ID000002

PARENT preprocess_ID000001 CHILD stage_out_local_isi_viz_0_0

PARENT subdax_black_ID000002 CHILD subdag_black_ID000003

PARENT stage_in_local_isi_viz_0 CHILD chmod_preprocess_ID000001_0

PARENT stage_in_local_isi_viz_0 CHILD preprocess_ID000001

PARENT chmod_preprocess_ID000001_0 CHILD preprocess_ID000001

PARENT pegasus_concat_blackdiamond_0 CHILD stage_in_local_isi_viz_0

######################################################################

# End of DAG

######################################################################
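The per-job statements and the PARENT/CHILD relations in a DAG file like the sample above can be collected with a few lines of Python. This is a minimal sketch, not the parser used by DAGMan or Pegasus: the function name parse_dag is hypothetical, it assumes one statement per line, and it deliberately ignores SCRIPT and SUBDAG statements and their continuation lines.

```python
from collections import defaultdict


def parse_dag(text):
    """Collect JOB, RETRY and PARENT/CHILD statements from DAG file text."""
    jobs = {}                      # job name -> submit file
    retries = {}                   # job name -> retry count
    children = defaultdict(list)   # parent job -> list of child jobs
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens or tokens[0].startswith("#"):
            continue               # skip blank lines and comments
        keyword = tokens[0]
        if keyword == "JOB" and len(tokens) >= 3:
            jobs[tokens[1]] = tokens[2]
        elif keyword == "RETRY" and len(tokens) >= 3:
            retries[tokens[1]] = int(tokens[2])
        elif keyword == "PARENT" and "CHILD" in tokens:
            split = tokens.index("CHILD")
            for parent in tokens[1:split]:
                children[parent].extend(tokens[split + 1:])
    return jobs, retries, dict(children)
```

For the sample, children["preprocess_ID000001"] would contain subdax_black_ID000002 and stage_out_local_isi_viz_0_0, which matches the two PARENT lines for that job.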

8.4. Kickstart Record

Kickstart is a lightweight C executable that is shipped with the Pegasus worker package. All jobs are launched via Kickstart on the remote end, unless explicitly disabled at the time of running pegasus-plan.

Kickstart does not work with:

HTCondor Standard Universe Jobs

MPI Jobs

Pegasus automatically disables kickstart for the above jobs. In those cases, the dagman.out and log can be used for higher level job duration and usage.

Kickstart captures useful runtime provenance information about the job it launches on the remote node and puts it in a YAML record that it writes to its own stdout. The stdout appears in the workflow submit directory as <job>.out.0NN, rotated for each job retry.

8.5. Reading a Kickstart Output File

Starting with Pegasus 5.0, pegasus-kickstart writes out the runtime provenance as a YAML document instead of the earlier XML-formatted document. The kickstart file below has the following fields highlighted:

The host on which the job executed and the IP address of that host

The duration (seconds) and start time of the job. Start time is in reference to the clock on the remote node where the job is executed.

The exitcode with which the job exited

The arguments with which the job was launched.

The directory in which the job executed on the remote site

The stdout of the job

The stderr of the job

The environment of the job

Resource usage

Time: utime (user space CPU time, seconds) and stime (system CPU time, seconds)

Memory: maxrss (maximum resident set size, KB)

File statistics

Filesize: size (B)

Checksum: sha256

- invocation: True
  version: 3.0
  start: 2020-06-12T22:25:51.876-07:00
  duration: 60.039
  transformation: "diamond::preprocess:4.0"
  derivation: "ID0000001"
  resource: "CCG"
  wf-label: "blackdiamond"
  wf-stamp: "2020-06-12T22:24:09-07:00"
  interface: eth0
  hostaddr: 128.9.36.72
  hostname: compute-2.isi.edu
  pid: 10187
  uid: 579
  user: ptesting
  gid: 100
  group: users
  umask: 0o0022
  mainjob:
    start: 2020-06-12T22:25:51.913-07:00
    duration: 60.002
    pid: 10188
    usage:
      utime: 59.993
      stime: 0.002
      maxrss: 1312
      minflt: 394
      majflt: 0
      nswap: 0
      inblock: 0
      outblock: 16
      msgsnd: 0
      msgrcv: 0
      nsignals: 0
      nvcsw: 2
      nivcsw: 326
    status:
      raw: 0
      regular_exitcode: 0
    executable:
      file_name: /var/lib/condor/execute/dir_9997/pegasus.nInvqOjMu/diamond-preprocess-4_0
      mode: 0o100755
      size: 82976
      inode: 369207696
      nlink: 1
      blksize: 4096
      blocks: 168
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users
    argument_vector:
      - -a
      - preprocess
      - -T
      - 60
      - -i
      - f.a
      - -o
      - f.b1
      - f.b2
    procs:
  jobids:
    condor: 9774913.0
    gram: https://obelix.isi.edu:49384/16866322196481424206/5750061617434002842/
  cwd: /var/lib/condor/execute/dir_9997/pegasus.nInvqOjMu
  usage:
    utime: 0.004
    stime: 0.034
    maxrss: 816
    minflt: 1358
    majflt: 1
    nswap: 0
    inblock: 544
    outblock: 0
    msgsnd: 0
    msgrcv: 0
    nsignals: 0
    nvcsw: 4
    nivcsw: 3
  machine:
    page-size: 4096
    uname_system: linux
    uname_nodename: compute-2.isi.edu
    uname_release: 3.10.0-1062.4.1.el7.x86_64
    uname_machine: x86_64
    ram_total: 7990140
    ram_free: 3355064
    ram_shared: 0
    ram_buffer: 0
    swap_total: 0
    swap_free: 0
    cpu_count: 4
    cpu_speed: 2600
    cpu_vendor: GenuineIntel
    cpu_model: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz
    load_min1: 0.02
    load_min5: 0.06
    load_min15: 0.06
    procs_total: 215
    procs_running: 1
    procs_sleeping: 214
    procs_vmsize: 42446148
    procs_rss: 1722380
    task_total: 817
    task_running: 1
    task_sleeping: 816
  files:
    f.b2:
      lfn: "f.b2"
      file_name: /var/lib/condor/execute/dir_9997/pegasus.nInvqOjMu/f.b2
      mode: 0o100644
      size: 114
      inode: 369207699
      nlink: 1
      blksize: 4096
      blocks: 8
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users
      output: True
      sha256: deac67f380112ecfa4b65879846a5f27abd64c125c25f8958cb1be44decf567f
      checksum_timing: 0.019
    f.b1:
      lfn: "f.b1"
      file_name: /var/lib/condor/execute/dir_9997/pegasus.nInvqOjMu/f.b1
      mode: 0o100644
      size: 114
      inode: 369207698
      nlink: 1
      blksize: 4096
      blocks: 8
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users
      output: True
      sha256: deac67f380112ecfa4b65879846a5f27abd64c125c25f8958cb1be44decf567f
      checksum_timing: 0.018
    stdin:
      file_name: /dev/null
      mode: 0o20666
      size: 0
      inode: 1034
      nlink: 1
      blksize: 4096
      blocks: 0
      mtime: 2019-10-29T08:35:24-07:00
      atime: 2019-10-29T08:35:24-07:00
      ctime: 2019-10-29T08:35:24-07:00
      uid: 0
      user: root
      gid: 0
      group: root
    stdout:
      temporary_name: /var/lib/condor/execute/dir_9997/ks.out.1uMt3U
      descriptor: 3
      mode: 0o100600
      size: 0
      inode: 302035961
      nlink: 1
      blksize: 4096
      blocks: 0
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users
      data_truncated: false
      data: |
        Tue Oct 6 15:25:25 PDT 2020
    stderr:
      temporary_name: /var/lib/condor/execute/dir_9997/ks.err.ict5LD
      descriptor: 4
      mode: 0o100600
      size: 0
      inode: 302035962
      nlink: 1
      blksize: 4096
      blocks: 0
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users
    metadata:
      temporary_name: /var/lib/condor/execute/dir_9997/ks.meta.TplHum
      descriptor: 5
      mode: 0o100600
      size: 0
      inode: 302035963
      nlink: 1
      blksize: 4096
      blocks: 0
      mtime: 2020-06-12T22:25:51-07:00
      atime: 2020-06-12T22:25:51-07:00
      ctime: 2020-06-12T22:25:51-07:00
      uid: 579
      user: ptesting
      gid: 100
      group: users

Note

pegasus-kickstart writes out the job environment only if the job exits with failure (nonzero exitcode). To see the job environment for a successful job, pass the -f option to pegasus-kickstart.
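Once a kickstart record has been loaded by a YAML parser, the highlighted fields can be pulled out of the resulting dictionary. The sketch below works on one already-parsed invocation record; it assumes that usage and status are nested under mainjob and that workflow files are the entries under files that carry an lfn key. The function name summarize_invocation is hypothetical, and loading the file (for example with the third-party PyYAML package's yaml.safe_load, which would return a list of such records) is left to the caller.

```python
def summarize_invocation(record):
    """Pull the highlighted fields out of one parsed kickstart invocation record."""
    mainjob = record.get("mainjob") or {}
    usage = mainjob.get("usage") or {}
    status = mainjob.get("status") or {}
    files = record.get("files") or {}
    return {
        "hostname": record.get("hostname"),
        "hostaddr": record.get("hostaddr"),
        "start": record.get("start"),
        "duration_s": record.get("duration"),
        "exitcode": status.get("regular_exitcode"),
        # CPU time is user-space time plus system time, both in seconds.
        "cpu_time_s": usage.get("utime", 0.0) + usage.get("stime", 0.0),
        "maxrss_kb": usage.get("maxrss"),
        # Assumption: only entries with an "lfn" key are workflow files;
        # stdin, stdout, stderr and metadata entries in the sample have none.
        "file_sizes": {
            name: info.get("size")
            for name, info in files.items()
            if isinstance(info, dict) and "lfn" in info
        },
    }
```

Keeping the summary separate from the parsing step means the same function can be reused regardless of which YAML library produced the dictionary.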

8.6. Jobstate.Log File

The jobstate.log file logs the various states that a job goes through during workflow execution. It is created by the pegasus-monitord daemon that is launched when a workflow is submitted to Condor DAGMan by pegasus-run. pegasus-monitord parses the dagman.out file and writes out the jobstate.log file, the format of which is more amenable to parsing.

Note

The jobstate.log file is not created if a user uses condor_submit_dag to submit a workflow to Condor DAGMan.

The jobstate.log file can be created after a workflow has finished executing by running pegasus-monitord on the .dagman.out file in the workflow submit directory.

Below is a snippet from the jobstate.log for a single job executed via Condor-G:

1239666049 create_dir_blackdiamond_0_isi_viz SUBMIT 3758.0 isi_viz - 1

1239666059 create_dir_blackdiamond_0_isi_viz EXECUTE 3758.0 isi_viz - 1

1239666059 create_dir_blackdiamond_0_isi_viz GLOBUS_SUBMIT 3758.0 isi_viz - 1

1239666059 create_dir_blackdiamond_0_isi_viz GRID_SUBMIT 3758.0 isi_viz - 1

1239666064 create_dir_blackdiamond_0_isi_viz JOB_TERMINATED 3758.0 isi_viz - 1

1239666064 create_dir_blackdiamond_0_isi_viz JOB_SUCCESS 0 isi_viz - 1

1239666064 create_dir_blackdiamond_0_isi_viz POST_SCRIPT_STARTED - isi_viz - 1

1239666069 create_dir_blackdiamond_0_isi_viz POST_SCRIPT_TERMINATED 3758.0 isi_viz - 1

1239666069 create_dir_blackdiamond_0_isi_viz POST_SCRIPT_SUCCESS - isi_viz - 1

Each entry in jobstate.log has the following:

The timestamp at which the particular event happened (seconds since the Unix epoch in the snippet above).

The name of the job.

The event recorded by DAGMan for the job.

The condor id of the job in the queue on the submit node.

The pegasus site to which the job is mapped.

The job time requirements from the submit file.

The job submit sequence for this workflow.

| STATE/EVENT | DESCRIPTION |
|---|---|
| SUBMIT | job is submitted by condor schedd for execution. |
| EXECUTE | condor schedd detects that a job has started execution. |
| GLOBUS_SUBMIT | the job has been submitted to the remote resource. It is only written for GRAM jobs (i.e. gt2 and gt4). |
| GRID_SUBMIT | same as GLOBUS_SUBMIT event. The ULOG_GRID_SUBMIT event is written for all grid universe jobs. |
| JOB_TERMINATED | job terminated on the remote node. |
| JOB_SUCCESS | job succeeded on the remote host; the condor id field will be zero (successful exit code). |
| JOB_FAILURE | job failed on the remote host; the condor id field will be the job’s exit code. |
| POST_SCRIPT_STARTED | post script started by DAGMan on the submit host, usually to parse the kickstart output. |
| POST_SCRIPT_TERMINATED | post script finished on the submit node. |
| POST_SCRIPT_SUCCESS / POST_SCRIPT_FAILURE | post script succeeded or failed. |
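Since every job entry carries the same seven whitespace-separated fields, a line of jobstate.log can be split into a small typed record. This is a minimal Python sketch based only on the field list above; the names JobstateEntry and parse_jobstate_line are hypothetical, and any line that does not have exactly seven fields is rejected rather than guessed at.

```python
from typing import NamedTuple


class JobstateEntry(NamedTuple):
    timestamp: int      # seconds since the Unix epoch, as in the snippet above
    job: str
    state: str
    condor_id: str      # "-" or an exit code for some states
    site: str
    walltime: str       # "-" when no time requirement is set
    submit_seq: int


def parse_jobstate_line(line):
    """Split one job entry of jobstate.log into its seven fields."""
    fields = line.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    ts, job, state, condor_id, site, walltime, seq = fields
    return JobstateEntry(int(ts), job, state, condor_id, site, walltime, int(seq))
```

The condor id and time requirement are kept as strings because both can be the placeholder "-", as several lines of the snippet show.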

There are other monitoring related files that are explained in the monitoring chapter.

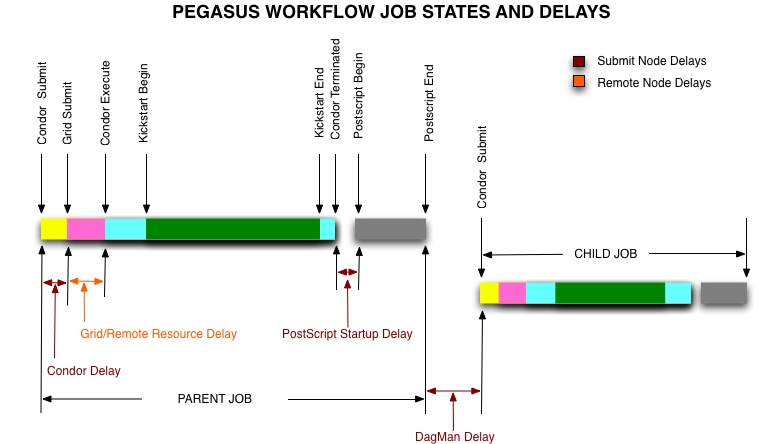

8.7. Pegasus Workflow Job States and Delays

The various job states that a job goes through ( as caputured in the dagman.out and jobstate.log file) during it’s lifecycle are illustrated below. The figure below highlights the various local and remote delays during job lifecycle.
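The delays between states can be estimated from the timestamps in jobstate.log by subtracting consecutive entries for the same job. The sketch below does only that; the function name state_intervals is hypothetical, and it makes no attempt to label an interval as a local or a remote delay.

```python
def state_intervals(lines, job):
    """Return [(from_state, to_state, seconds)] for one job, in log order."""
    events = []
    for line in lines:
        fields = line.split()
        # Field order follows the jobstate.log entries: timestamp, job, state, ...
        if len(fields) >= 3 and fields[1] == job and fields[0].isdigit():
            events.append((int(fields[0]), fields[2]))
    return [
        (prev_state, state, ts - prev_ts)
        for (prev_ts, prev_state), (ts, state) in zip(events, events[1:])
    ]
```

Applied to the create_dir_blackdiamond_0_isi_viz entries in the jobstate.log section, the first interval is ("SUBMIT", "EXECUTE", 10), i.e. ten seconds elapsed between submission and the start of execution.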

8.8. Braindump File

The braindump file is created per workflow in the submit directory and contains metadata about the workflow.

| KEY | DESCRIPTION |
|---|---|
| user | the username of the user that ran pegasus-plan |
| grid_dn | the Distinguished Name in the proxy |
| submit_hostname | the hostname of the submit host |
| root_wf_uuid | the workflow uuid of the root workflow |
| wf_uuid | the workflow uuid of the current workflow, i.e. the one in whose submit directory the braindump file is located |
| dax | the path to the dax file |
| dax_label | the label attribute in the adag element of the dax |
| dax_index | the index in the dax |
| dax_version | the version of the DAX schema that the DAX refers to |
| pegasus_wf_name | the workflow name constructed by pegasus when planning |
| timestamp | the timestamp when planning occurred |
| basedir | the base submit directory |
| submit_dir | the full path for the submit directory |
| properties | the full path to the properties file in the submit directory |
| planner | the planner used to construct the executable workflow. always pegasus |
| planner_version | the version of the planner |
| pegasus_build | the build timestamp |
| planner_arguments | the arguments with which the planner is invoked |
| jsd | the path to the jobstate file |
| rundir | the rundir in the numbering scheme for the submit directories |
| pegasushome | the root directory of the pegasus installation |
| vogroup | the vo group to which the user belongs. Defaults to pegasus |
| condor_log | the full path to condor common log in the submit directory |
| notify | the notify file that contains any notifications that need to be sent for the workflow |
| dag | the basename of the dag file created |
| type | the type of executable workflow. Can be dag, shell, or pmc |

A Sample Braindump File is displayed below:

user vahi

grid_dn null

submit_hostname obelix

root_wf_uuid a4045eb6-317a-4710-9a73-96a745cb1fe8

wf_uuid a4045eb6-317a-4710-9a73-96a745cb1fe8

dax /data/scratch/vahi/examples/synthetic-scec/Test.dax

dax_label Stampede-Test

dax_index 0

dax_version 3.3

pegasus_wf_name Stampede-Test-0

timestamp 20110726T153746-0700

basedir /data/scratch/vahi/examples/synthetic-scec/dags

submit_dir /data/scratch/vahi/examples/synthetic-scec/dags/vahi/pegasus/Stampede-Test/run0005

properties pegasus.6923599674234553065.properties

planner /data/scratch/vahi/software/install/pegasus/default/bin/pegasus-plan

planner_version 3.1.0cvs

pegasus_build 20110726221240Z

planner_arguments "--conf ./conf/properties --dax Test.dax --sites local --output local --dir dags --force --submit "

jsd jobstate.log

rundir run0005

pegasushome /data/scratch/vahi/software/install/pegasus/default

vogroup pegasus

condor_log Stampede-Test-0.log

notify Stampede-Test-0.notify

dag Stampede-Test-0.dag

type dag
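The sample above uses one key per line, separated from its value by whitespace, so it can be read into a dictionary with very little code. This is a minimal Python sketch for that plain key/value layout only; the function name parse_braindump is hypothetical, and a braindump.yml file as listed in the Layout section would instead call for a YAML parser.

```python
def parse_braindump(text):
    """Parse the whitespace-separated key/value braindump format shown above."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue                       # tolerate blank lines between entries
        parts = line.split(None, 1)        # split on the first run of whitespace
        key = parts[0]
        value = parts[1] if len(parts) > 1 else ""
        # planner_arguments is wrapped in double quotes in the sample; drop them.
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]
        info[key] = value
    return info
```

All values are returned as strings, so a caller that needs dax_index as a number has to convert it explicitly.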

8.9. Pegasus static.bp File

Pegasus creates a workflow.static.bp file that links jobs in the DAG with the jobs in the DAX. The contents of the file are in netlogger format. The purpose of this file is to link an invocation record of a task to the corresponding job in the DAX.

Here, workflow is replaced by the name of the workflow, i.e. the same prefix as the .dag file.

In the file there are five types of events:

task.info

This event is used to capture information about all the tasks in the DAX (abstract workflow).

task.edge

This event is used to capture information about the edges between the tasks in the DAX (abstract workflow).

job.info

This event is used to capture information about the jobs in the DAG (executable workflow generated by Pegasus).

job.edge

This event is used to capture information about edges between the jobs in the DAG (executable workflow).

wf.map.task_job

This event is used to associate the tasks in the DAX with the corresponding jobs in the DAG.