Summit is a leadership-class computing system for open science, hosted at the Oak Ridge National Laboratory. Launched in 2018, Summit delivers 8 times the computational performance of Titan’s 18,688 nodes while using only 4,608 nodes. Its architecture, with the associated GPU resources, makes it an attractive resource for scientists who want to run their application workflows at scale. However, creating a working workflow submission environment from scratch can take considerable effort to debug and deploy. In addition, access to Summit’s resources is tightly controlled by two-factor authentication, which makes it difficult to submit jobs remotely in an automated fashion.

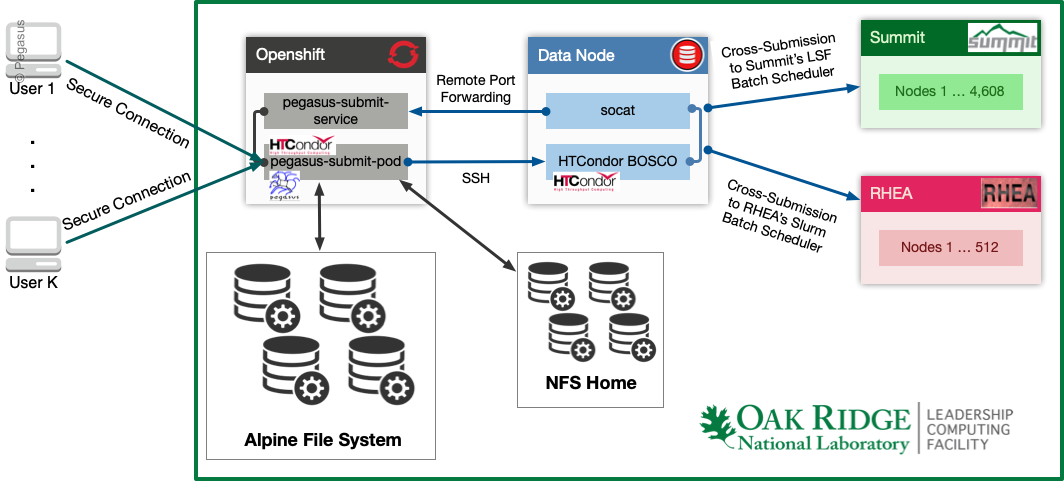

Over the past year, the Pegasus Team has been working with the OLCF staff to lower the barriers to using the OLCF compute resources. The OLCF team provides an OpenShift/Kubernetes-based infrastructure within their DMZ that facilitates access to the various computing resources at OLCF. Using this service, users can spin up, on demand, a “Pod” with one or more containers configured specifically for their workflows and applications. Via annotations, the Pods running on this cluster inherit the ability to submit jobs using the local resource managers and schedulers at OLCF, such as LSF and Slurm. We have built on top of this infrastructure and are now happy to offer our users a new, container-based way of creating Pegasus workflow submission environments at OLCF. Users with allocations at OLCF can spin up their own Pegasus-enabled containers on the OLCF Marble Kubernetes Cluster and create fully functional workflow submit nodes that can be used for job submissions to Summit.

In this Pegasus deployment, the GPFS and NFS mounts are attached to the pegasus-submit-pod during instantiation, while job submissions are handled on the data transfer nodes (DTNs) by HTCondor’s BOSCO via SSH. After initialization, all the necessary HTCondor daemons are started automatically and the Pegasus binaries become available in the user’s shell. Because the HTCondor BOSCO processes running on the DTNs need to communicate with the submit node, we use socat to forward packets back to the pegasus-submit-pod. All the configuration required for this deployment is automated by bootstrap and entrypoint scripts in the template Dockerfile recipe our team provides, which makes the environment easy to deploy and reproduce.
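To make the forwarding step more concrete, here is a minimal Python sketch of what a TCP relay such as socat does conceptually: it listens on one address and copies bytes in both directions between each incoming connection and a fixed target. This is only an illustration and not the deployment's actual configuration; the function names (`start_forwarder`, `_pipe`, `_handle`) are hypothetical, and the real setup relies on socat itself, driven by the bootstrap scripts.

```python
import socket
import threading


def _pipe(src, dst):
    """Copy bytes from src to dst until src closes, then half-close dst."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # The peer went away; stop relaying in this direction.
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def _handle(client, target):
    """Relay one client connection to the target in both directions."""
    try:
        upstream = socket.create_connection(target)
    except OSError:
        client.close()
        return
    # One thread per direction: target -> client here, client -> target below.
    back = threading.Thread(target=_pipe, args=(upstream, client), daemon=True)
    back.start()
    _pipe(client, upstream)
    back.join()
    client.close()
    upstream.close()


def start_forwarder(listen_host, listen_port, target_host, target_port):
    """Start relaying in background threads and return the listening socket.

    Hypothetical helper for illustration; pass listen_port=0 to let the
    operating system pick a free port.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((listen_host, listen_port))
    server.listen()

    def accept_loop():
        while True:
            try:
                client, _ = server.accept()
            except OSError:
                break  # The listening socket was closed.
            threading.Thread(
                target=_handle,
                args=(client, (target_host, target_port)),
                daemon=True,
            ).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server
```

In the deployment described above, the relay would sit on the DTN side and point at the pegasus-submit-pod, so that processes on the DTN can reach the submit node through a local address.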

Note: Because Kubernetes pods are ephemeral, we suggest saving your files and workflow directories on the shared file system so that they persist across pod restarts.
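One way to follow the note above is to always derive workflow directories from the shared mount. The Python sketch below assumes you know the path of a shared file system mount inside the pod; the helper name `persistent_run_dir` and its arguments are hypothetical and are not part of Pegasus.

```python
from pathlib import Path


def persistent_run_dir(shared_root, workflow_name):
    """Create and return a workflow directory under the shared mount.

    Hypothetical helper: shared_root is the shared file system path
    visible inside the pod, workflow_name is a directory name below it.
    """
    root = Path(shared_root).resolve()
    run_dir = (root / workflow_name).resolve()
    # Reject names such as "../scratch" or absolute paths, which would
    # place the directory outside the shared mount and on pod-local storage.
    if root not in run_dir.parents:
        raise ValueError(f"{run_dir} is not inside {root}")
    run_dir.mkdir(parents=True, exist_ok=True)
    return run_dir
```

Calling the helper with the shared mount path and a workflow name returns a directory that survives a pod restart, whereas anything written to the container's own file system is lost.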

Finally, we have updated our tutorial executable, pegasus-init, to set up example workflows that you can run from this infrastructure on Summit. The tutorial is available online (see Relevant Links below). We encourage you to try it and welcome your feedback.

Relevant Links

Pegasus OLCF GitHub Repository: https://github.com/pegasus-isi/pegasus-olcf-kubernetes

Pegasus Summit Tutorial: https://pegasus.isi.edu/tutorial/summit/
