The forthcoming Pegasus 5.1.0 release introduces significant updates to container support, further streamlining workflow execution and enhancing compatibility with container technologies. Pegasus has long supported containerization tools like Docker and Apptainer (formerly Singularity), enabling users to encapsulate their software environments for consistent and portable workflow execution. A substantial portion of our user base already leverages these technologies to package and deploy their applications.

Key Updates in Version 5.1.0

Refined Data Transfer Mechanisms for Containerized Jobs

PegasusLite now offers two distinct approaches for handling data transfers in containerized jobs. The shift to host-based transfers as the default aims to simplify workflows and minimize the overhead associated with customizing container images.

- Host-Based Transfers (Default in 5.1.0): Input and output data are staged on the host operating system before launching the container. This method utilizes pre-installed data transfer tools on the host, reducing the need for additional configurations within the container.

- Container-Based Transfers: Data transfers occur within the container prior to executing user code. This approach requires the container image to include necessary data transfer utilities like curl, ftp, or globus-online. Users preferring this method can set the property pegasus.transfer.container.onhost to false in their configuration files.

Integration with HTCondor’s Container Universe

Pegasus 5.1.0 introduces support for HTCondor’s container universe, which lets Pegasus delegate container management (invoking the container, launching the user job, stopping the container) to HTCondor instead of handling it in the PegasusLite job wrapper. This integration simplifies job submission and execution in pure HTCondor environments where the container universe is available.

This enhancement builds upon Pegasus’s initial container support introduced in version 4.8.0, reflecting ongoing efforts to improve compatibility and user experience.

Overall, these updates in Pegasus 5.1.0 are designed to provide users with greater flexibility and efficiency when working with containerized workflows.

1. Data Transfers for Jobs Running in a Container

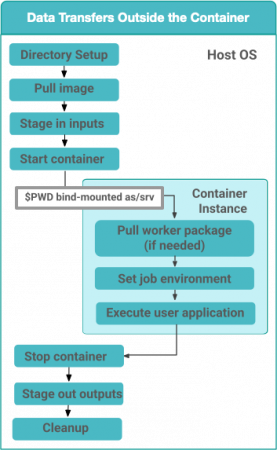

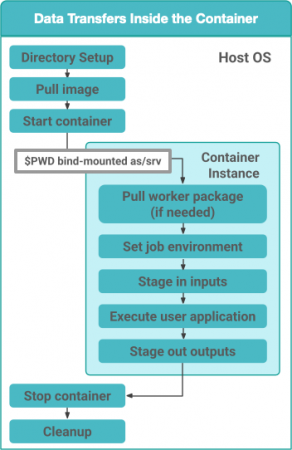

When a job is specified to run in an application container such as Docker or Singularity, PegasusLite now has two options for where the job's data transfers occur. The transfers can happen either

- on the HOST OS before the container in which the job has to execute is invoked OR

- inside the application container, before the user code is invoked.

| Approach 1: Data transfers set up on the HOST OS. The new default approach. | Approach 2: Data transfers set up inside the container. |
| --- | --- |

Starting with 5.1.0, Pegasus uses Approach 1 (transfers on the HOST OS before container invocation) as the default.

Both approaches have their own advantages and disadvantages. Prior to the 5.1.0 release, Pegasus only invoked data transfers for the job inside the application container, before the user code was invoked (Approach 2).
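The practical difference between the two approaches is where the transfer steps sit relative to the container launch. The following Python sketch is purely illustrative and is not PegasusLite code: the function name `job_steps` and the step labels are hypothetical, and the placement of the stage-out step is an assumption that simply mirrors the stage-in placement described above.

```python
from typing import List


def job_steps(transfers_on_host: bool) -> List[str]:
    """Return an illustrative ordering of steps for one containerized job.

    Hypothetical model only: the strings are labels, not PegasusLite calls.
    """
    if transfers_on_host:
        # Approach 1: transfers use the tools installed on the HOST OS.
        return [
            "stage-in inputs on HOST OS",
            "launch container",
            "run user code",
            "exit container",
            "stage-out outputs on HOST OS",  # assumed to mirror stage-in
        ]
    # Approach 2: transfers use the tools packaged in the container image.
    return [
        "launch container",
        "stage-in inputs inside container",
        "run user code",
        "stage-out outputs inside container",  # assumed to mirror stage-in
        "exit container",
    ]


if __name__ == "__main__":
    for on_host in (True, False):
        print("on host" if on_host else "in container", "->", job_steps(on_host))
```

In this model, Approach 1 never needs a transfer client inside the image, which is the motivation for making it the default.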

Approach 1: Data Transfers setup to be on the HOST OS

Pegasus 5.1.0 adds support for doing the data transfers required for the job on the HOST OS, before the application container is launched. The primary reasoning is that the execution environment (local HPC cluster, OSPool, ACCESS sites, etc.) will most likely already have the data transfer tools preinstalled and available on the worker nodes.

Keeping this in mind, we have decided to make the HOST OS data transfers the default option.

Approach 2: Data Transfers setup inside the container

This approach gave users the flexibility to install their own data transfer clients in the container when those clients were not installed on the HOST OS. However, it also meant that users' application containers needed to be packaged with standard data transfer clients such as curl, ftp, globus-online, etc. This could often be cumbersome when using third-party containers, as users needed to update the container definition files and build their own containers to install the missing data transfer tools.

Users who want to use this approach (which was the default prior to the 5.1.0 release) can do so by setting the property pegasus.transfer.container.onhost to false in their properties file.
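As a minimal sketch of that change, the Python helper below sets a key in a Java-style properties file using only the standard library. The helper name `set_property` and the file name `pegasus.properties` are assumptions made for illustration; only the property name and value come from the text above. It handles plain `key = value` lines and leaves everything else untouched.

```python
import tempfile
from pathlib import Path


def set_property(props_file: Path, key: str, value: str) -> None:
    """Set `key = value` in a properties file, replacing any existing entry.

    Hypothetical helper: only simple `key = value` lines are recognized.
    """
    lines = props_file.read_text().splitlines() if props_file.exists() else []
    # Drop any previous assignment of the same key; comments and other keys stay.
    kept = [ln for ln in lines if ln.split("=", 1)[0].strip() != key]
    kept.append(f"{key} = {value}")
    props_file.write_text("\n".join(kept) + "\n")


if __name__ == "__main__":
    # Demonstrate on a throwaway file; the file name is an assumption.
    with tempfile.TemporaryDirectory() as tmp:
        props = Path(tmp) / "pegasus.properties"
        set_property(props, "pegasus.transfer.container.onhost", "false")
        print(props.read_text(), end="")
```

Editing the properties file by hand works just as well; the helper is only convenient when the file is generated by a script.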

2. Support for HTCondor container universe

When we first introduced support for containers in Pegasus 4.8.0 in 2017, HTCondor did not have first-class support for containers. In addition, what container support existed was limited to pure HTCondor environments and was not available, for example, when running in the grid universe (used for submitting jobs to local HPC clusters). Keeping this in mind, and to retain the most flexibility, we decided to manage the staging of the application container for the job in the PegasusLite job wrapper that gets invoked when a job is launched on the worker node. This allowed us to bring container support to all the environments Pegasus supports.

While these limitations still exist, HTCondor support for containers has greatly improved in pure HTCondor environments such as PATh and OSPool. Keeping this in mind, we have added support for the “container” universe in Pegasus, whereby we delegate container management (invoking the container, launching the user job, stopping the container) to HTCondor for those environments. To enable this, add a condor profile with key universe and value “container” for your execution site in the Site Catalog.

A sample YAML snippet is included below:

    - name: condorpool
      arch: x86_64
      os.type: linux
      directories: []
      profiles:
        condor: {universe: container}
        pegasus: {style: condor, clusters.num: 1}

Please note that at this point, we only support Apptainer/Singularity containers to be launched in the container universe. When enabled, Pegasus will stage-in the container as part of the data stage-in nodes in the executable workflow, and place them in the user submit directory. The container then gets transferred to the worker node where the user job is launched using in-built HTCondor file transfers.
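To make the restriction concrete, here is a small Python sketch that mirrors the sample site entry as a plain dictionary and checks a job's container type against it. This is not part of the Pegasus API: the names `SITE`, `uses_container_universe`, and `check_job`, as well as the container type strings, are hypothetical and chosen only for illustration.

```python
# Site entry mirroring the sample YAML snippet, as a plain Python dict.
SITE = {
    "name": "condorpool",
    "arch": "x86_64",
    "os.type": "linux",
    "directories": [],
    "profiles": {
        "condor": {"universe": "container"},
        "pegasus": {"style": "condor", "clusters.num": 1},
    },
}

# Per the note above, only Apptainer/Singularity containers can be launched
# in the container universe. The type strings are assumptions of this sketch.
SUPPORTED_IN_CONTAINER_UNIVERSE = {"singularity", "apptainer"}


def uses_container_universe(site: dict) -> bool:
    """True if the site has a condor profile with universe set to container."""
    return site.get("profiles", {}).get("condor", {}).get("universe") == "container"


def check_job(site: dict, container_type: str) -> None:
    """Raise ValueError if the container type cannot run on this site."""
    if (
        uses_container_universe(site)
        and container_type.lower() not in SUPPORTED_IN_CONTAINER_UNIVERSE
    ):
        raise ValueError(
            f"{container_type!r} containers are not supported in the "
            f"container universe on site {site['name']!r}"
        )


if __name__ == "__main__":
    check_job(SITE, "singularity")  # passes silently
    try:
        check_job(SITE, "docker")
    except ValueError as err:
        print("rejected:", err)
```

A check like this could run before planning to catch a Docker image paired with a container-universe site early.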

If you are symlinking data or using the shared filesystem for data staging and want those directories to be mounted in the container, you need to update the HTCondor configuration on the EP (Execution Point) to set the variable SINGULARITY_BIND_EXPR. More details can be found in the HTCondor documentation.

Want to try out Pegasus 5.1.0?

We are just a couple of weeks away from the release. If you would like to try out the nightly builds, you can grab them from our download server.