The Pegasus team is thrilled by LIGO’s discovery: the first detection of gravitational waves from colliding black holes. We congratulate the entire LIGO Scientific Collaboration and the Virgo Collaboration on this incredible achievement, and we are very pleased to have contributed to LIGO’s software infrastructure. One of the main analysis pipelines LIGO used to detect the gravitational-wave signal was executed with the Pegasus Workflow Management System (WMS). That pipeline, pyCBC, is described below. It uses Pegasus to run on infrastructure ranging from the LIGO Data Grid and XSEDE to the Open Science Grid. Read more about the detection here.

The Laser Interferometer Gravitational-Wave Observatory (LIGO) is a network of gravitational-wave detectors, with observatories in Livingston, LA, and Hanford, WA. The observatories’ mission is to detect and measure the gravitational waves predicted by general relativity (Einstein’s theory of gravity), in which gravity arises from the curvature of spacetime. One well-studied phenomenon expected to produce gravitational waves is the inspiral and coalescence of a pair of dense, massive astrophysical objects such as neutron stars and black holes. Such binary inspiral signals are among the most promising sources for LIGO. Gravitational waves interact extremely weakly with matter, so the effects their passage produces in terrestrial instruments are minuscule. To increase the probability of detection, large amounts of data must be acquired and analyzed. These data contain the strain signal that records the passage of gravitational waves. LIGO applications often require on the order of a terabyte of data to produce meaningful results.

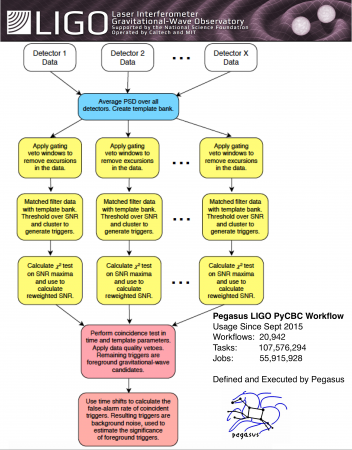

Advanced LIGO pyCBC Workflows

The LIGO Laboratory started the first observing run, ‘O1’, with the Advanced LIGO detectors in September 2015. Its sensitivity was roughly 4 times greater than Initial LIGO for some classes of sources (e.g., neutron-star binaries), and much greater for larger systems whose peak radiation falls at lower audio frequencies. The pyCBC analysis workflows are modeled as a single-stage pipeline that can analyze data from both the LIGO and Virgo detectors.

Pegasus executes these workflows on a variety of resources. These include local campus clusters, the LIGO Data Grid, OSG, XSEDE (in combination with Pegasus MPI Cluster) and Virgo clusters.

Each pyCBC workflow has:

- 60,000 compute tasks

- Input data: 5,000 files (10 GB total)

- Output data: 60,000 files (60 GB total)
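A quick back-of-the-envelope reading of these figures shows the shape of the workload. It assumes decimal units (1 GB = 1000 MB); the source does not say which convention is meant. The constant and function names are illustrative only.

```python
# Figures for a single pyCBC workflow, as listed above.
COMPUTE_TASKS = 60_000
INPUT_FILES, INPUT_GB = 5_000, 10
OUTPUT_FILES, OUTPUT_GB = 60_000, 60

MB_PER_GB = 1000  # assumption: decimal units


def avg_file_size_mb(total_gb: float, n_files: int) -> float:
    """Average file size in MB, given a total size in GB and a file count."""
    return total_gb * MB_PER_GB / n_files


avg_in = avg_file_size_mb(INPUT_GB, INPUT_FILES)     # 2.0 MB per input file
avg_out = avg_file_size_mb(OUTPUT_GB, OUTPUT_FILES)  # 1.0 MB per output file
outputs_per_task = OUTPUT_FILES / COMPUTE_TASKS      # 1.0 output file per task

print(f"average input file:    {avg_in:.1f} MB")
print(f"average output file:   {avg_out:.1f} MB")
print(f"output files per task: {outputs_per_task:.1f}")
```

On average, each task writes about one small (roughly 1 MB) output file. A workload made of many small files like this puts much of its pressure on file metadata and file-system operations rather than raw bandwidth.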

Incorporating Pegasus into the production pipeline has enabled LIGO users to:

- Run analysis workflows across sites. Analysis workflows are launched on XSEDE and OSG resources, with post-processing steps running on the LIGO Data Grid.

- Monitor and share workflows using the Pegasus Workflow Dashboard.

- Debug their workflows more easily.

- Separate their workflow log directories from the execution directories. Their earlier pipeline required the logs to reside on the clusters’ shared filesystem. This caused scalability problems, because the load on NFS increased drastically when large workflows were launched.

- Re-run an analysis later without rerunning all the sub-workflows from the start. This uses Pegasus’s data reuse capabilities. LIGO data may need to be analyzed several times because of changes in, e.g., detector calibration or data-quality flags, and a complete re-analysis is very computationally intensive. Using Pegasus’s workflow reduction capabilities, the LIGO Scientific Collaboration (LSC) and Virgo have been able to reuse existing data products from previous runs when those products are suitable.
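The workflow-reduction idea in the last item can be illustrated with a small, self-contained Python sketch. It is a simplified, demand-driven version written for this page and is not the Pegasus implementation or API. It starts from the files the analysis needs and walks backwards through the jobs that produce them. It stops wherever a file already exists; in Pegasus, already-available files are looked up in a replica catalog. The function, job and file names are hypothetical.

```python
from collections import deque


def reduce_workflow(jobs, requested, existing):
    """Return the set of jobs that still have to run.

    jobs:      job name -> (set of input files, set of output files)
    requested: files the analysis ultimately needs
    existing:  files already available, i.e. raw input data plus
               reusable products of earlier runs
    """
    # Map every file to the job that produces it.
    producer = {f: name for name, (_, outs) in jobs.items() for f in outs}
    to_run = set()
    # Walk backwards from the requested files, stopping at any file that exists.
    pending = deque(f for f in requested if f not in existing)
    seen = set(pending)
    while pending:
        f = pending.popleft()
        job = producer.get(f)
        if job is None:
            raise ValueError(f"missing file {f!r} has no producing job")
        if job in to_run:
            continue
        to_run.add(job)
        inputs, _ = jobs[job]
        for g in inputs:
            if g not in existing and g not in seen:
                seen.add(g)
                pending.append(g)
    return to_run


# Hypothetical four-step analysis; job and file names are illustrative only.
jobs = {
    "make_bank": ({"psd.txt"}, {"bank.xml"}),
    "filter": ({"strain.gwf", "bank.xml"}, {"triggers.h5"}),
    "coinc": ({"triggers.h5"}, {"coinc.h5"}),
    "plot": ({"coinc.h5"}, {"summary.png"}),
}
raw = {"psd.txt", "strain.gwf"}

# Triggers from a previous run are still suitable: only the last two steps rerun.
rerun = reduce_workflow(jobs, {"summary.png"}, raw | {"bank.xml", "triggers.h5"})
# Triggers are no longer suitable, but the bank can still be reused.
recal = reduce_workflow(jobs, {"summary.png"}, raw | {"bank.xml"})
print(sorted(rerun))  # ['coinc', 'plot']
print(sorted(recal))  # ['coinc', 'filter', 'plot']
```

A pruned job also removes the need for its inputs. Whole upstream branches therefore drop out without being examined (in the first case, both `make_bank` and `filter`). This is what makes partial re-analysis much cheaper than starting over.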

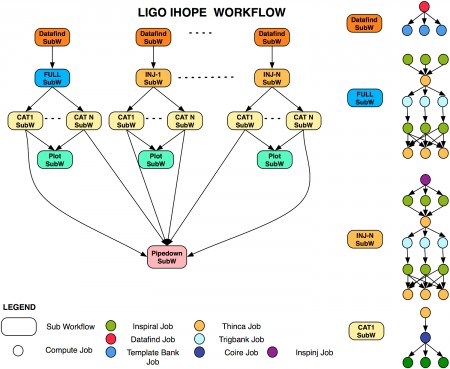

LIGO Inspiral Group Workflows

Until 2011, the LIGO Compact Binary Coalescence (CBC) group ran a two-stage analysis pipeline called iHOPE. These workflows were executed with Pegasus on both the LIGO Data Grid (LDG) and OSG. They had fewer tasks than those of SCEC (the Southern California Earthquake Center), but they were much more data-intensive, operating on terabyte-scale data within a single workflow. Pegasus’s flexible storage interfaces allowed us to integrate it with SRM (Storage Resource Manager), a storage solution used on OSG. LIGO used this capability to automatically stage and access workflow input and output data via SRM, using temporary local storage on each compute node.

The CBC group in LIGO used Pegasus as its workflow management tool for the sixth science run (S6), which collected data from June 2009 to October 2010. The Pegasus team was part of a joint OSG-LIGO task force that aimed to address the data management issues that arise when running large-scale workflows on OSG.

The single iHOPE workflow shown above consists of several large sub-workflows, each analyzing the same input dataset. Several advanced Pegasus features were used to reduce the run time of the iHOPE workflows and make them run reliably. These included job clustering, hierarchical workflows, throttling, priorities, retries and data clustering.
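Job clustering, one of the features listed above, merges many short tasks into fewer scheduler jobs to cut per-job scheduling overhead. The sketch below illustrates a horizontal-clustering pass in plain Python. It groups jobs that run the same transformation at the same DAG level into clusters of at most `max_size` tasks. It does not use the Pegasus API. The function names, job names and cluster size are hypothetical, and Pegasus’s own clustering offers more options than shown here.

```python
from collections import defaultdict


def levels(parents):
    """Depth of each job in a DAG: roots are level 0, and every other job
    sits one level below its deepest parent."""
    depth = {}

    def visit(job):
        if job not in depth:
            depth[job] = 1 + max((visit(p) for p in parents[job]), default=-1)
        return depth[job]

    for job in parents:
        visit(job)
    return depth


def horizontal_clusters(parents, transformation, max_size):
    """Group jobs that run the same transformation at the same level into
    clusters of at most max_size jobs each."""
    depth = levels(parents)
    groups = defaultdict(list)
    for job in sorted(parents):  # sorted so the output is deterministic
        groups[(depth[job], transformation[job])].append(job)
    clusters = []
    for key in sorted(groups):  # level first, then transformation name
        members = groups[key]
        for i in range(0, len(members), max_size):
            clusters.append(members[i:i + max_size])
    return clusters


# Hypothetical DAG: five independent "inspiral" jobs feeding one "coinc" job.
parents = {f"insp{i}": [] for i in range(5)}
parents["coinc"] = [f"insp{i}" for i in range(5)]
transformation = {job: "coinc" if job == "coinc" else "inspiral" for job in parents}

clusters = horizontal_clusters(parents, transformation, max_size=2)
print(clusters)
# [['insp0', 'insp1'], ['insp2', 'insp3'], ['insp4'], ['coinc']]
```

With `max_size=2`, six jobs become four cluster submissions, and the same grouping applies to workflows with tens of thousands of short tasks. The recursive level computation is fine for a sketch. For very deep DAGs, an iterative topological sort would avoid Python’s recursion limit.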

Publications:

LIGO Detection Paper on Gravitational Waves: Observation of Gravitational Waves from a Binary Black Hole Merger

Article in iSGTW on searching for gravitational waves using Pegasus: Looking for gravitational waves: A computing perspective

Gurmeet Singh, Karan Vahi, Arun Ramakrishnan, Gaurang Mehta, Ewa Deelman, Henan Zhao, Rizos Sakellariou, Kent Blackburn, Duncan Brown, Stephen Fairhurst, David Meyers, G. Bruce Berriman, “Optimizing Workflow Data Footprint,” special issue of the Scientific Programming Journal dedicated to Dynamic Computational Workflows: Discovery, Optimisation and Scheduling, 2007.

Arun Ramakrishnan, Gurmeet Singh, Henan Zhao, Ewa Deelman, Rizos Sakellariou, Karan Vahi, Kent Blackburn, David Meyers and Michael Samidi, “Scheduling Data-Intensive Workflows onto Storage-Constrained Distributed Resources,” Seventh IEEE International Symposium on Cluster Computing and the Grid (CCGrid 2007).

D. A. Brown, P. R. Brady, A. Dietz, J. Cao, B. Johnson, and J. McNabb, “A Case Study on the Use of Workflow Technologies for Scientific Analysis: Gravitational Wave Data Analysis,” in Workflows for e-Science, I. Taylor et al., Eds. Springer, 2006.

G. Singh, E. Deelman, G. Mehta, K. Vahi, M. Su, B. Berriman, J. Good, J. Jacob, D. Katz, A. Lazzarini, K. Blackburn, S. Koranda, “The Pegasus Portal: Web Based Grid Computing,” The 20th Annual ACM Symposium on Applied Computing, Santa Fe, New Mexico, March 13–17, 2005.

Ewa Deelman, James Blythe, Yolanda Gil, Carl Kesselman, Scott Koranda, Albert Lazzarini, Gaurang Mehta, Maria Alessandra Papa, Karan Vahi, “Pegasus and the Pulsar Search: From Metadata to Execution on the Grid,” Applications Grid Workshop, PPAM 2003, Czestochowa, Poland, 2003.

E. Deelman, C. Kesselman, G. Mehta, L. Meshkat, L. Pearlman, K. Blackburn, P. Ehrens, A. Lazzarini, R. Williams, S. Koranda, “GriPhyN and LIGO, Building a Virtual Data Grid for Gravitational Wave Scientists,” High Performance Distributed Computing (HPDC-11), 2002, pp. 225–234.

Scientists:

Syracuse: Duncan Brown, Larne Pekowsky, Alex Nitz, Ian Harry, Samantha Usman

Caltech: Stuart Anderson, Peter Couvares, Kent Blackburn, Britta Dauderts, Robert Engel