Authors: G. Bruce Berriman (Caltech/IPAC-NExScI), John C. Good (Caltech/IPAC-NExScI), Ewa Deelman (USC ISI), Karan Vahi (USC ISI), Ryan Tanaka (USC ISI)

The Montage Toolkit

High-powered telescopes regularly capture an abundance of high-resolution images of the sky. Each individual image represents a small, detailed portion of the sky, but none captures a large object such as a galaxy cluster in its entirety. To study such objects, scientists need to be able to view them as complete units.

Since its inception in 2002, the Montage Image Mosaic Toolkit [1] has enabled astronomers and researchers to seamlessly stitch together massive mosaics of the sky using upwards of millions of high resolution images. Montage ensures that mosaics are accurately generated by preserving spatial and calibration fidelity of all input images. Part of Montage’s success within the scientific community can be attributed to its modular design. Montage provides tools which can analyze the geometry of images, perform re-projection, rectify background emissions to common levels (a unique feature of Montage), and perform co-addition of images. Each tool can be run on its own or as part of a larger Montage pipeline. The entire codebase is written in C, and as such will run on desktops, clusters, and computational grids running Linux/Unix operating systems. Given the amount of input data required to generate a mosaic of the sky, and the complexity of a production Montage pipeline, Pegasus serves as an ideal workflow management system that can enable researchers to build and run such workflows.

Building Montage Pipelines with Pegasus

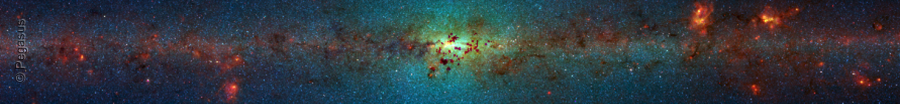

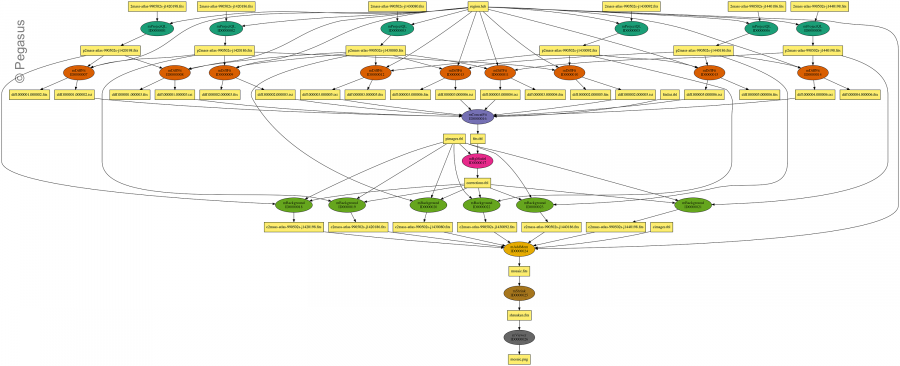

The workflow shown in Figure 2 was developed as a proof of concept using the Pegasus 5.0 Python API. This workflow will generate a mosaic of the Omega Nebula (also known as M17) shown in Figure 2 using data obtained from the Two Micron All Sky Survey (2MASS) [2]. M17 is between 5,000 and 6,000 light-years from Earth and spans some 15 light-years in diameter. M17 is of great interest to astronomers because it is one of the brightest and most massive star-forming regions of our galaxy.

Figure 3 illustrates a small version of this Montage workflow. Manually managing dependencies between jobs can be a challenge, especially as a workflow grows in size. The Pegasus 5.0 Python API conveniently infers these dependencies from the input and output files specified for each job and ensures that workflow execution proceeds accordingly. Another useful feature of Pegasus is that executables are abstracted away from the notion of a job. This means that you can define an executable once and reuse it for multiple jobs, each of which may take different command line arguments when run. In this workflow, we utilized the following Montage Toolkit components: mProjectQL, mDiffFit, mConcatFits, mBgModel, mBackground, mAdd, mShrink, and mViewer. Using this as a starting point, you can build your own Montage workflow to start generating seamless mosaics.

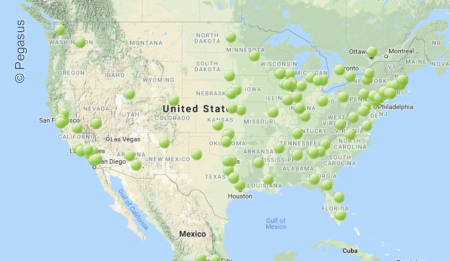
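To make the idea of inferring dependencies from files concrete, here is a minimal sketch in plain Python. It does not use the Pegasus API and is not how Pegasus is implemented; it only illustrates the principle that a job depends on whichever job produces one of its input files. The job names, file names, and the simplified input/output lists for each Montage tool are hypothetical, chosen for illustration only.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical job table: job name -> (executable, input files, output files).
# Note that one executable (e.g. mProjectQL) is reused by several jobs.
jobs = {
    "project_1": ("mProjectQL", ["raw_1.fits"], ["proj_1.fits"]),
    "project_2": ("mProjectQL", ["raw_2.fits"], ["proj_2.fits"]),
    "diff_1_2": ("mDiffFit", ["proj_1.fits", "proj_2.fits"], ["fit_1_2.txt"]),
    "concat": ("mConcatFits", ["fit_1_2.txt"], ["fits.tbl"]),
    "bg_model": ("mBgModel", ["fits.tbl"], ["corrections.tbl"]),
    "background_1": ("mBackground", ["proj_1.fits", "corrections.tbl"], ["corr_1.fits"]),
    "background_2": ("mBackground", ["proj_2.fits", "corrections.tbl"], ["corr_2.fits"]),
    "add": ("mAdd", ["corr_1.fits", "corr_2.fits"], ["mosaic.fits"]),
}


def infer_dependencies(jobs):
    """Return {job: set of jobs it depends on}, derived only from file usage."""
    # Map every output file to the job that produces it.
    producer = {}
    for name, (_exe, _inputs, outputs) in jobs.items():
        for filename in outputs:
            producer[filename] = name

    # A job depends on the producer of each of its inputs. Inputs with no
    # producer (such as the raw images) are treated as pre-existing data.
    deps = {name: set() for name in jobs}
    for name, (_exe, inputs, _outputs) in jobs.items():
        for filename in inputs:
            if filename in producer:
                deps[name].add(producer[filename])
    return deps


deps = infer_dependencies(jobs)

# One valid execution order that respects every inferred dependency.
order = list(TopologicalSorter(deps).static_order())

for name in order:
    print(f"{name:13s} runs {jobs[name][0]:11s} after {sorted(deps[name])}")
```

In this sketch the two projection jobs have no dependencies on each other, so a scheduler would be free to run them in parallel, which is the kind of parallelism larger mosaics can exploit.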

Scaling Up on the OSG

Local resources such as a laptop or desktop may not provide the number of cores necessary to capitalize on the parallelizable regions of larger Montage workflows. Allocating cloud resources to handle this computation is one solution. A more cost-effective (free) approach is to run the Pegasus workflow on the Open Science Pool (OSPool), a service of the OSG [3,4], which provides access to shared computing and data resources. Figure 4 illustrates where OSPool resources are located within the U.S. Any U.S. researcher may request an account at osgconnect.net. One advantage of running on the OSG is that OSG Connect login nodes come with the latest version of Pegasus pre-configured out of the box, so no installation or setup is required. Furthermore, you can run the Montage workflow developed for the ADASS 21 demo on OSG without any major changes.

With a few minimal changes to our Pegasus workflow generation script, we configured this workflow to utilize two key features of the OSG: container support and the handling of data movements by the StashCache [5] data infrastructure. If your workflow jobs have additional software dependencies, these can be packaged up into a Docker or Singularity container. OSG will ensure that your Pegasus jobs run within their specified containers, which are cached on OSG’s CernVM file system (CVMFS). StashCache caches your files in an opportunistic manner such that subsequent jobs utilize files located in the nearest cache.

Our initial run on OSG comprised 332 jobs and took 54 minutes and 43 seconds. OSG has access to upwards of 270,000 CPU cores, so we have the potential to run much larger Montage workflows in the future. More information on running Pegasus workflows on OSG can be found here.

Watch the ADASS 21 Demo

Try it out!

The ADASS 21 demo content has been containerized so that interested users can easily reproduce what was done in the demo. This container includes the Jupyter notebook presented in the demo and an installation of Pegasus and HTCondor. Access the Docker image here.

Acknowledgments

Montage is funded by the National Science Foundation grant awards 1835379, 1642453, and 1440620.

The Pegasus Workflow Management Software is funded by the National Science Foundation under grant #1664162.

This research was done using resources provided by the OSG [3,4], which is supported by the National Science Foundation award #2030508.

References

- Berriman, G., & Good, J. (2017). The Application of the Montage Image Mosaic Engine To The Visualization Of Astronomical Images. PASP, 129(975), 058006.

- The Two Micron All Sky Survey (2MASS). M.F. Skrutskie, R.M. Cutri, R. Stiening, M.D. Weinberg, S. Schneider, J.M. Carpenter, C. Beichman, R. Capps, T. Chester, J. Elias, J. Huchra, J. Liebert, C. Lonsdale, D.G. Monet, S. Price, P. Seitzer, T. Jarrett, J.D. Kirkpatrick, J. Gizis, E. Howard, T. Evans, J. Fowler, L. Fullmer, R. Hurt, R. Light, E.L. Kopan, K.A. Marsh, H.L. McCallon, R. Tam, S. Van Dyk, and S. Wheelock, 2006, AJ, 131, 1163. (Bibliographic Code: 2006AJ....131.1163S)

- Pordes, R. et al. (2007). “The Open Science Grid”, J. Phys. Conf. Ser. 78, 012057. doi:10.1088/1742-6596/78/1/012057.

- Sfiligoi, I., Bradley, D. C., Holzman, B., Mhashilkar, P., Padhi, S. and Wurthwein, F. (2009). “The Pilot Way to Grid Resources Using glideinWMS”, 2009 WRI World Congress on Computer Science and Information Engineering, Vol. 2, pp. 428–432. doi:10.1109/CSIE.2009.950.

- Weitzel, D., Zvada, M., Vukotic, I., Gardner, R. W., Bockelman, B., Rynge, M., Fajardo Hernandez, E., Lin, B. and Selmeci, M. (2019). “StashCache: A Distributed Caching Federation for the Open Science Grid”, CoRR, abs/1905.06911.